[AINews] LMSys advances Llama 3 eval analysis • Buttondown

Chapters

Twitter, Reddit, and Discord Recaps

Multimodal AI and Generative Modeling Innovations

CUDA Mode Discord

AI Discord Channels Highlights

Innovations and Discussions in the AI Community

WorldSim's Grand Resurgence

Computer Vision Discussion

Clarifying Model Directory Structure

Diving into Various LM Studio Discussions

CUDA Mode Discussions

Diverse Discussions in AI Communities

OpenAccess AI Collective Updates

Cohere, Mozilla AI, LLM Perf Enthusiasts AI

Twitter, Reddit, and Discord Recaps

The AI Twitter recap includes updates on AlphaFold 3, OpenAI Model Spec, and Llama 3's performance. The AI Reddit recap covers developments such as the OpenAI and Microsoft AI supercomputer project, IBM's Granite Code outperforming Llama 3, and Apple's M4 chip. In the AI Discord recap, advancements in Large Language Models, optimizing inference and training, and efforts in open-source AI frameworks and community initiatives are highlighted.

Multimodal AI and Generative Modeling Innovations

- Idefics2 8B Chatty focuses on elevated chat interactions, while CodeGemma 1.1 7B refines coding abilities.

- The Phi 3 model brings powerful AI chatbots to browsers via WebGPU.

- Combining Pixart Sigma + SDXL + PAG aims to achieve DALLE-3-level outputs, with potential for further refinement through fine-tuning.

- The open-source IC-Light project focuses on improving image relighting techniques.

CUDA Mode Discord

LibTorch Compile-Time Shenanigans: Using ATen’s `at::native::randn` and including only necessary headers like `<ATen/ops/randn_native.h>` significantly reduced compile times.

ZeRO-ing in on Efficiency: ZeRO++ claims a 4x reduction in communication overhead for training large models on NVIDIA's A100 GPUs.
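The 4x figure can be made concrete with a little arithmetic. The sketch below assumes the per-step cross-node communication volumes usually quoted for ZeRO-3 and ZeRO++, expressed in units of the model size M; these numbers are not stated in the recap and nothing here is measured.

```python
from fractions import Fraction

# Cross-node communication volume per training step, in units of M (model size).
# Assumption: these are the commonly quoted figures, not measurements.
ZERO3_VOLUME = {
    "forward all-gather (weights)": Fraction(1),
    "backward all-gather (weights)": Fraction(1),
    "gradient reduce-scatter": Fraction(1),
}
ZEROPP_VOLUME = {
    # Weights quantized from FP16 to INT8 before the all-gather.
    "forward all-gather (weights)": Fraction(1, 2),
    # Weights re-gathered from a node-local secondary copy, so no cross-node traffic.
    "backward all-gather (weights)": Fraction(0),
    # Gradients quantized from FP16 to INT4 before reduction.
    "gradient reduce-scatter": Fraction(1, 4),
}


def total_volume(volume_by_collective):
    """Sum the per-collective volumes into a single multiple of M."""
    return sum(volume_by_collective.values(), Fraction(0))


baseline = total_volume(ZERO3_VOLUME)
optimized = total_volume(ZEROPP_VOLUME)
reduction = baseline / optimized

print(f"ZeRO-3: {baseline}M, ZeRO++: {optimized}M, reduction: {reduction}x")
```

Under these assumptions the total drops from 3M to 0.75M per step, which is where a 4x claim would come from.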

Tuning Diffusion Models to the Max: A 9-part blog series showcases optimization strategies in U-Net diffusion models for the GPU.

vAttention Climbing Memory Management Mountain: vAttention proposes a system for dynamic KV-cache memory management in language model inference.
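To see why KV-cache memory management matters, it helps to estimate how large the cache gets. The sketch below is a back-of-the-envelope calculation, not vAttention's method. The configuration (32 layers, 8 KV heads, head dimension 128, FP16 values) is an assumed Llama-3-8B-like setup chosen for illustration, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Estimate KV-cache size in bytes for a decoder-only transformer."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Assumed, illustrative configuration (Llama-3-8B-like, FP16 cache).
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1)
reserved = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=8192)
used = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=512)

print(f"per token: {per_token // 1024} KiB")
print(f"reserved for 8192 tokens: {reserved // 2**20} MiB")
print(f"used by a 512-token request: {used // 2**20} MiB")
```

If memory for the full context were reserved up front for every request, a short request would leave most of its reservation idle. That gap is the kind of waste dynamic allocation aims to avoid.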

Apple's M4 Flexes Compute Muscles: Apple's M4 chip boasts 38 trillion operations per second, showcasing advancements in mobile compute power.

AI Discord Channels Highlights

Mozilla AI Discord

- API Now, Code Less: Meta-Llama-3-8B-Instruct operates through an API endpoint at localhost. Details available on the project's GitHub page.

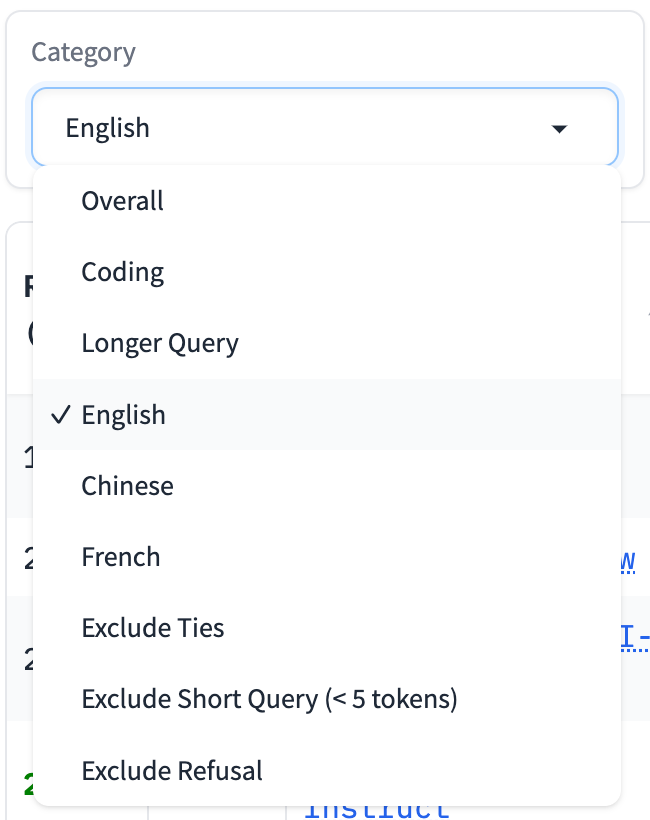

- Switching Models Just Got Easier: Visual Studio Code introduces a dropdown feature for model swapping.

- Request for Efficiency in Llamafile Updates: Feature request to update the binary scaffold of llamafile efficiently.
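As a rough illustration of the localhost API endpoint mentioned above, the sketch below builds a chat request using only the Python standard library. The port (`8080`), the OpenAI-style `/v1/chat/completions` path, the model name string, and the response shape read by `send` are all assumptions; check the project's GitHub page for the actual values.

```python
import json
import urllib.request

# Assumed base URL for a locally running server; adjust to your setup.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(prompt, base_url=BASE_URL,
                       model="Meta-Llama-3-8B-Instruct"):
    """Build (but do not send) a chat-completion style POST request."""
    payload = {
        "model": model,  # assumed identifier; some servers ignore this field
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the first reply (needs a running server)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # Assumes an OpenAI-style response layout.
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Say hello in one sentence.")
print(request.get_method(), request.full_url)
```

Calling `send(request)` only works while a compatible server is listening on that address. Building the request separately makes it possible to inspect the payload first.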

LLM Perf Enthusiasts AI Discord

- AI Aides Excel Wizards: Exploring LLMs for spreadsheet data manipulation.

- Yawn.xyz's Ambitious AI Spreadsheet Demo: Community feedback indicates performance issues.

- Seeking Smooth GPT-4-turbo Azure Deployments: Engineer faces issues with GPT-4-turbo in Sweden Azure region.

Alignment Lab AI Discord

- AlphaFold3 Enters the Open Source Arena: Implementation of AlphaFold3 in PyTorch for predicting biomolecular interactions. Available on GitHub for review and contribution.

- Agora's Link for AlphaFold3 Collaboration Fails to Connect: Reported faulty link hinders collaboration.

Skunkworks AI Discord

- AI Engineers, Check This Out: YouTube video link shared without context.

AI Stack Devs (Yoko Li) Discord

- Quickscope Hits the Mark: Introduction of Quickscope, an AI-powered toolkit for game testing automation.

- Deep Dive Into Game Test Automation: Quickscope's property scraping feature enables detailed data extraction.

- A Testing Platform for QA Teams: Quickscope's smart replay systems designed for QA teams.

- Interactive UI Meets Game Testing: Platform's intuitive UI compatible with Unity editor.

- Experiment with Quickscope: Engineers encouraged to try Quickscope's suite of AI tools.

Datasette - LLM (@SimonW) Discord

- Command-Line Cheers: Appreciation for the llm command-line interface tool.

Innovations and Discussions in the AI Community

The IBM Granite collection is receiving attention in the AI community, particularly around its unusual GPTBigCodeForCausalLM architecture. Unsloth AI is featured in Hugging Face's Dolphin model. Discord users discuss challenges with running AI models on Windows and concerns about model benchmarks. OpenAI is collaborating with Stack Overflow on LLM database usage. Members address concerns about running out of content for AI training, propose startup projects, and recommend strategies for profitable startups. Discussions of continual training techniques, finetuning logistics, RoPE theta modification, and chaining finetune tasks offer useful insights. Other research topics include scaled LSTM models, new LLM releases, surprising model efficiencies, future AI research trends, and OpenAI's model spec for reinforcement learning.

WorldSim's Grand Resurgence

The WorldSim platform has been revitalized with bug fixes and new features, including WorldClient, Root, Mind Meld, MUD, tabletop RPG simulator, enhanced capabilities, and model selection options. Users can now experience a personalized Internet 2, simulate a CLI environment, play text-based adventure games, and more. Discussions invite users to explore further and share thoughts on the new WorldSim via a dedicated Discord channel.

Computer Vision Discussion

The Computer Vision channel in the HuggingFace Discord server hosted various discussions and shared resources related to computer vision. Topics included detecting ads in images with the Adlike library, enhancements to object detection guides, visual question answering challenges, text-to-image models, and extracting content from information-dense PDFs. Members discussed tools and methods for improving computer vision tasks and shared links to relevant resources and repositories.

Clarifying Model Directory Structure

Members discussed the proper directory for storing models in LM Studio, which involves a top-level `\models` folder with subfolders for each publisher and respective repository, similar to Hugging Face's naming convention. For example, a model file should be located at `\models\lmstudio-community\Meta-Llama-3-8B-Instruct-GGUF\Meta-Llama-3-8B-Instruct-Q8_0.gguf`.
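The publisher/repository/file layout described above can be sketched with `pathlib`. The helper name `model_path` is hypothetical, and `PureWindowsPath` is used only so the backslash-separated form prints the same on any platform.

```python
from pathlib import PureWindowsPath


def model_path(models_root, publisher, repository, filename):
    """Compose <models_root>\\<publisher>\\<repository>\\<filename>."""
    return PureWindowsPath(models_root) / publisher / repository / filename


path = model_path(
    r"\models",
    "lmstudio-community",
    "Meta-Llama-3-8B-Instruct-GGUF",
    "Meta-Llama-3-8B-Instruct-Q8_0.gguf",
)
print(path)

# The last three components recover publisher, repository, and file name.
publisher, repository, filename = path.parts[-3:]
print(publisher, repository, filename)
```

Keeping publisher and repository as separate folder levels mirrors the `publisher/repository` naming used on Hugging Face.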

Diving into Various LM Studio Discussions

Several LM Studio discussions covered model search bars, model recommendations, updates on LM Studio experiences, and model frustrations. Members talked about changing model paths, vision model recommendations, optimal models for coding, eagerly awaited updates, and frustrations with poetry models. Users also discussed RAG architectures and smart chunk selection to enhance search, parsing and sparse contexts, llama.cpp issues, bottleneck mysteries, LLM inference engines, and the slow lane of computing traffic. Hardware discussions touched on desktop optimization, decoding hardware requirements, the cost-efficiency of offline model use, choosing the right LLM for Apple M1, and AI capabilities beyond LMs. A self-merge masterpiece announcement, dev chat on chat interactions, and ethical early implementations were also featured.

Under Eleuther research, members explored xLSTM paper skepticism, function vectors for in-context learning, the YOCO decoder-decoder architecture, and multilingual LLM cognition. The community also shared perspectives on simplifying KV caches, revisiting positional encodings, and absolute positions.

Finally, in CUDA MODE, conversations revolved around dynamic vs static compiling, diffusion inference optimizations, and turning GPU coil whine into music.

CUDA Mode Discussions

The CUDA Mode channel discussions cover various topics related to CUDA optimization and performance. In one discussion, the community explores ways to optimize a CUDA matmul kernel for cuBLAS-like performance. Another focuses on Triton, including GitHub collaborations, tutorials, and how Triton's programming model compares to CUDA. The section also covers optimizing PyTorch performance, including C++ extensions, libtorch vs. extensions, and model caching with AOTInductor. Lastly, there are discussions on advanced memory management systems like vAttention, boosting LLM inference with quantization, and CLLMs for speedier inference. The aim is to improve the efficiency and performance of large language model training and inference.

Diverse Discussions in AI Communities

This section provides insights into various discussions happening in different AI community channels. From discussions about AI models such as Groq's API and Gemini Pro Vision to evaluations of different local models like TheBloke/deepseek-coder and mixtral-8x7b-instruct, the community members share their experiences and findings. Other topics include fine-tuning models, automating insurance data processing, AI contests, and more. Members also explore different AI tools and frameworks like LlamaIndex, OpenRouter, LangChain, and CrewAI, discussing integration challenges, model hosting, and optimization strategies.

OpenAccess AI Collective Updates

The OpenAccess AI Collective section includes updates on various discussions and developments within Discord channels. The highlights include the release of RefuelLLM-2 by RefuelAI, the discussion on dataset formats supported by Axolotl, and the conversation on error resolution while training on GPUs. Additionally, the section covers insights on reinforcement learning from human feedback by OpenAI, achievements of Meta's Llama 3-70B in the Chatbot Arena, and discussions related to the tinygrad project focusing on unary operations and symbolic implementations.

Cohere, Mozilla AI, LLM Perf Enthusiasts AI

This section includes discussions from several Discord channels, including Cohere, Mozilla AI, and LLM Perf Enthusiasts AI. In Cohere, users tackled challenges with RAG implementation, generating downloadable files, resolving CORS concerns, adding credits, and requesting a dark mode for Coral. Mozilla AI discussed backend service implementation, VS Code integration with ollama, updating Llamafile, and curious clown banter. LLM Perf Enthusiasts AI explored AI assistance in spreadsheet analysis, Yawn.xyz's approach to extracting data, and Azure regions for GPT-4-turbo. Alignment Lab AI shared the open-source implementation of AlphaFold3, and Skunkworks AI included a link to a YouTube video. Finally, AI Stack Devs discussed the launch of Quickscope by Regression Games, deep property scraping, discovering the Quickscope platform, and tool highlights for efficient testing.

FAQ

Q: What is the Phi 3 model and what does it bring to browsers via WebGPU?

A: The Phi 3 model brings powerful AI chatbots to browsers via WebGPU.

Q: What is the IC-Light project focused on?

A: The open-source IC-Light project focuses on improving image relighting techniques.

Q: How does ZeRO++ claim to improve efficiency in training large models on NVIDIA's A100 GPUs?

A: ZeRO++ claims a 4x reduction in communication overhead for training large models on NVIDIA's A100 GPUs.

Q: What is the purpose of the vAttention proposal in language model inference?

A: vAttention proposes a system for dynamic KV-cache memory management in language model inference.

Q: What are some of the key features of Apple's M4 chip?

A: Apple's M4 chip boasts 38 trillion operations per second, showcasing advancements in mobile compute power.

Q: How has LibTorch Compile-Time been optimized?

A: Using ATen’s `at::native::randn` and including only necessary headers like `<ATen/ops/randn_native.h>` significantly reduced compile times.

Q: What are some of the highlighted advancements in the AI Discord recap?

A: Advancements in Large Language Models, optimizing inference and training, and efforts in open-source AI frameworks and community initiatives are highlighted in the AI Discord recap.

Q: What is the significance of the Idefics2 8B Chatty and CodeGemma 1.1 7B models in the context of generative modeling?

A: Idefics2 8B Chatty focuses on elevated chat interactions, while CodeGemma 1.1 7B refines coding abilities in the context of generative modeling.

Q: What unique capability does the Pixart Sigma + SDXL + PAG combination aim to achieve?

A: Combining Pixart Sigma + SDXL + PAG aims to achieve DALLE-3-level outputs, with potential for further refinement through fine-tuning.
